Contribute an ELG compatible service

This page describes how to contribute a language technology service to run on the cloud platform of the European Language Grid.

Currently, ELG supports the integration of tools/services that fall into one of the following broad categories:

Information Extraction (IE) : Services that take text and annotate it with metadata on specific segments, e.g. Named Entity Recognition (NER), the task of extracting persons, locations, and organizations from a given text.

Text Classification (TC) : Services that take text and return a classification for the given text from a finite set of classes, e.g. Text Categorization which is the task of categorizing text into (usually labelled) organized categories.

Machine Translation (MT) : Services that take text in one language and translate it into text in another language, possibly with additional metadata associated with each segment (sentence, phrase, etc.).

Automatic Speech Recognition (ASR) : Services that take audio as input and produce text (e.g., a transcription) as output, possibly with metadata associated with each segment.

Text-to-Speech Generation (TTS) : Services that take text as input and produce audio as output.

Overview: How an LT Service is integrated to ELG

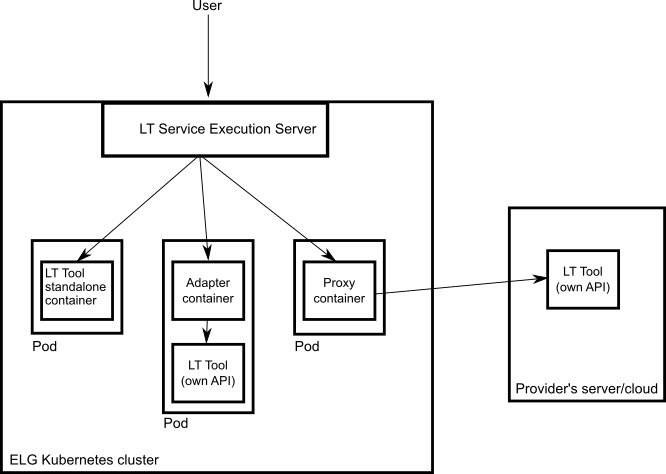

An overview of the ELG platform is depicted below.

The following bullets summarize how LT services are deployed and invoked in ELG.

All LT Services (as well as all the other ELG components) are deployed (run as containers) on a Kubernetes (k8s) cluster; k8s is a system for automating deployment, scaling, and management of containerised applications.

All LT Services are integrated into ELG via the LT Service Execution Orchestrator/Server. This server exposes a common public REST (Representational State Transfer) API for invoking any of the deployed backend LT Services. The public API is used by ELG’s Trial UIs, which are embedded in the ELG Catalogue; it can also be invoked from the command line or from any programming language; see the Use an LT service section for more information. Some of the HTTP endpoints offered by the API are listed below; for more information, see the Public LT API specification.

| Endpoint | Type | Consumes | Produces |
| --- | --- | --- | --- |
| https://{domain}/execution/processText/{ltServiceID} | POST | ‘application/json’ | ‘application/json’ |
| https://{domain}/execution/processText/{ltServiceID} | POST | ‘text/plain’ or ‘text/html’ | ‘application/json’ |
| https://{domain}/execution/processAudio/{ltServiceID} | POST | ‘audio/x-wav’ or ‘audio/wav’ | ‘application/json’ |
| https://{domain}/execution/processAudio/{ltServiceID} | POST | ‘audio/mpeg’ | ‘application/json’ |

{domain} is ‘live.european-language-grid.eu’ and {ltServiceID} is the ID of the backend LT service. This ID is assigned/configured during registration; see section 3. Register the service at ELG - ‘LT Service is deployed to ELG and configured’ step.
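As a rough illustration of the endpoints listed above, the following sketch fills in the URL template and prepares a plain-text POST request with Python's standard library. The service ID `474` and the function names are made up for this example, and any authentication the platform may require is not shown; the Public LT API specification remains the authoritative reference.

```python
import urllib.request

DOMAIN = "live.european-language-grid.eu"  # value of {domain} given above


def build_endpoint(lt_service_id, kind="processText", domain=DOMAIN):
    """Fill in the public endpoint template for one LT service."""
    if kind not in ("processText", "processAudio"):
        raise ValueError(f"unsupported endpoint kind: {kind}")
    return f"https://{domain}/execution/{kind}/{lt_service_id}"


def build_text_request(lt_service_id, text):
    """Prepare (but do not send) a POST request that carries plain text."""
    return urllib.request.Request(
        build_endpoint(lt_service_id),
        data=text.encode("utf-8"),
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


# Hypothetical service ID, used only for illustration.
request = build_text_request(474, "Angela Merkel visited Paris.")
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would send it; that call is left out here so the sketch runs without network access.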

Note

The REST API that is exposed from an LT Service X (see above) is for the communication between the LT Service Execution Orchestrator Server and X (ELG internal API - see Internal LT Service API specification).

When the LT Service Execution Orchestrator receives a processing request for service X, it retrieves from the database X’s k8s REST endpoint and sends a request to it. This endpoint is configured/specified during the registration process; see section 3. Register the service at ELG - ‘LT Service is deployed to ELG and configured’ step. When the Orchestrator gets the response from the LT Service, it returns it to the application/client that sent the initial call.
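The lookup-and-forward flow described above can be sketched as follows. This is a simplified model, not the Orchestrator's actual code: the dictionary stands in for the database, the service ID, namespace and path are hypothetical, and the HTTP call is replaced by an injectable function so that the example runs without a cluster.

```python
from typing import Callable, Dict

# Stand-in for the database that maps an LT service ID to its internal
# k8s REST endpoint; the entry below is hypothetical.
ENDPOINTS: Dict[str, str] = {
    "demo-ner": "http://demo-ner.demo-namespace.svc.cluster.local/process",
}


def orchestrate(service_id: str, payload: dict,
                send: Callable[[str, dict], dict]) -> dict:
    """Look up the internal endpoint, forward the request, return the reply."""
    endpoint = ENDPOINTS.get(service_id)
    if endpoint is None:
        raise KeyError(f"unknown LT service: {service_id}")
    return send(endpoint, payload)


def fake_send(endpoint: str, payload: dict) -> dict:
    """Pretend backend call so the sketch runs without a cluster."""
    return {"handled_by": endpoint, "echo": payload}


reply = orchestrate("demo-ner", {"content": "some text"}, fake_send)
print(reply["handled_by"])
```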

0. Before you start

Please make sure that the service you want to contribute complies with our terms of use.

Please make sure you have registered and been assigned the provider role.

Please make sure that your service meets the technical requirements below, and choose one of the three integration options.

Technical requirements and integration options

The requirements for integrating an LT tool/service to ELG are the following:

Expose an ELG compatible endpoint: You MUST create an application that exposes an HTTP endpoint for the provided LT tool(s). The application MUST consume (via the aforementioned HTTP endpoint) requests that follow the ELG JSON format, call the underlying LT tool and produce responses again in the ELG JSON format. For a detailed description of the JSON-based HTTP protocol (ELG Internal LT API) that you have to implement, see the Internal LT API specification.

Dockerisation: You MUST dockerise the application and upload the respective image(s) to a Docker registry, such as GitLab, DockerHub, Azure Container Registry, etc. You MAY select, out of the following three options, the one that best fits your needs:

LT tools packaged in one standalone image: One docker image is created that contains the application that exposes the ELG-compatible endpoint and the actual LT tool.

LT tools running remotely outside the ELG infrastructure: For these tools, one proxy image is created that exposes one (or more) ELG-compatible endpoints; the proxy container communicates with the actual LT service that runs outside the ELG infrastructure.

LT tools requiring an adapter: For tools that already offer an image that exposes a non-ELG compatible endpoint (HTTP-based or other), a second adapter image SHOULD be created that exposes an ELG-compatible endpoint and acts as proxy to the container that hosts the actual LT tool.
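To make the first requirement more concrete, here is a minimal sketch of an application that accepts a JSON request over HTTP, calls an LT tool and replies in JSON, using only Python's standard library. The message fields (`type`, `content`, `response`, `annotations`, `failure`), the error strings, the status codes and the toy tool are assumptions made for illustration; the real message layout is defined by the Internal LT API specification and must be checked there.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def find_capitalised_words(text):
    """Toy stand-in for the real LT tool: offsets of capitalised words."""
    spans, pos = [], 0
    for word in text.split():
        start = text.index(word, pos)
        pos = start + len(word)
        if word[0].isupper():
            spans.append((start, pos))
    return spans


def handle_request(message):
    """Map a decoded JSON request to a JSON-serialisable reply.

    The message layout is assumed for illustration only.
    """
    if (not isinstance(message, dict) or message.get("type") != "text"
            or not isinstance(message.get("content"), str)):
        return {"failure": {"errors": ["unsupported request"]}}
    annotations = [{"start": s, "end": e}
                   for s, e in find_capitalised_words(message["content"])]
    return {"response": {"type": "annotations",
                         "annotations": {"Capitalised": annotations}}}


class ElgStyleHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            reply = handle_request(json.loads(self.rfile.read(length)))
        except ValueError:  # body was not valid JSON
            reply = {"failure": {"errors": ["invalid JSON"]}}
        body = json.dumps(reply).encode("utf-8")
        self.send_response(200 if "response" in reply else 400)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Start the endpoint; blocks until interrupted."""
    HTTPServer(("", port), ElgStyleHandler).serve_forever()


print(handle_request({"type": "text", "content": "Angela visited Paris"}))
```

In the standalone-image option the tool function runs in the same process, as here; for the proxy and adapter options, the same handler could instead forward the text to the remote service or to the container that hosts the actual tool. Calling `serve()` starts the server on the port of your choice.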

The following diagram shows the three options for integrating an LT tool:

1. Dockerize your service

Build/Store Docker images

Ideally, the source code of your LT tool/service already resides on GitLab, where a built-in Continuous Integration (CI) Runner can take care of building the image. GitLab also offers a container registry that can be used for storing the built image. To use them, add a .gitlab-ci.yml file and a Dockerfile (i.e., the recipe for building the image) at the root level of your GitLab repository. Here you can find an example. After each new commit, the CI Runner is automatically triggered and runs the CI pipeline that is defined in .gitlab-ci.yml.

You can see the progress of the pipeline on the respective page in GitLab UI (“CI / CD -> Jobs”); when it completes successfully, you can also find the image at “Packages -> Container Registry”.

Your image can also be built and tagged on your machine by running the docker build command. It can then be uploaded (with docker push) to the GitLab registry, DockerHub (which is a public Docker registry) or any other Docker registry.

For instance, for this GitLab hosted project, the commands would be:

docker login registry.gitlab.com

to log in, so that you are allowed to push an image;

docker build -t registry.gitlab.com/european-language-grid/dfki/elg-jtok .

to build an image (locally) for the project; please note that before running docker build you have to download (clone) a copy of the project and be in its top-level directory (elg-jtok), which the trailing dot passes to docker build as the build context;

docker push registry.gitlab.com/european-language-grid/dfki/elg-jtok

to push the image to GitLab.
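If you prefer to script these steps, the small helper below assembles the same three commands for any registry and project path. It is only a sketch: the function name is made up, the `latest` tag is spelled out explicitly (Docker assumes it when no tag is given), and the build context `.` is passed explicitly as the current directory.

```python
import shlex


def docker_commands(registry, project_path, tag="latest", context="."):
    """Return the login, build and push commands as argument lists."""
    image = f"{registry}/{project_path}:{tag}"
    return [
        ["docker", "login", registry],
        ["docker", "build", "-t", image, context],
        ["docker", "push", image],
    ]


commands = docker_commands("registry.gitlab.com",
                           "european-language-grid/dfki/elg-jtok")
for command in commands:
    print(shlex.join(command))
```

Each argument list can be handed to `subprocess.run(command, check=True)` from the project's top-level directory; this is left out so that the example has no side effects.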

The following links provide more information on docker commands, plus some examples:

Dockerization of a Python-based LT tool

An example of a Python-based LT tool: Python-based example.

Dockerization of a Java-based tool

A Spring Boot starter to make it as easy as possible to create ELG-compliant tools in Java is provided at: ELG Spring Boot Starter.

2. Describe the service

Metadata overview

The service must be described according to the ELG schema and comply at least with the minimal version. The metadata elements that you need to provide for the service comprise a set of elements organized (for presentation purposes) into the following groups:

You will find all the mandatory and recommended elements for all tools/services in the above sections.

Please note that for ELG integrated services, you MUST include in your metadata record a SoftwareDistribution component with the following elements:

SoftwareDistributionForm (Mandatory): The medium, delivery channel or form (e.g., source code, API, web service, etc.) through which a software object is distributed. For ELG integrated services, use the value http://w3id.org/meta-share/meta-share/dockerImage.

dockerDownloadLocation (Mandatory if applicable): A location where the LT tool docker image is stored. Add the location from where the ELG team can download the docker image in order to test it.

serviceAdapterDownloadLocation (Mandatory if applicable): The URL where the docker image of the service adapter can be downloaded from. Required only for ELG integrated services implemented with an adapter.

executionLocation (Mandatory): A URL where the resource (mainly software) can be directly executed. Add here the REST endpoint at which the LT tool is exposed within the Docker image.

additionalHwRequirements (Mandatory if applicable): A short text where you specify additional requirements for running the service, e.g. memory requirements, etc. The recommended format for this is: ‘limits_memory: X limits_cpu: Y’
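As a quick way to check these elements before filling in the editor or a metadata file, they can be modelled as a plain dictionary. This is only an illustrative sketch and not the serialisation used by the ELG schema: the image location, the endpoint and the resource values (`2Gi`, `1`) are hypothetical, and only the two unconditionally mandatory elements are checked.

```python
# Elements marked "Mandatory" (without "if applicable") in the list above.
MANDATORY = ("SoftwareDistributionForm", "executionLocation")


def hw_requirements(memory, cpu):
    """Format the value in the recommended 'limits_memory: X limits_cpu: Y' form."""
    return f"limits_memory: {memory} limits_cpu: {cpu}"


def missing_elements(distribution):
    """Return the mandatory element names that are absent or empty."""
    return [name for name in MANDATORY if not distribution.get(name)]


distribution = {
    "SoftwareDistributionForm":
        "http://w3id.org/meta-share/meta-share/dockerImage",
    # Hypothetical image location and endpoint, for illustration only.
    "dockerDownloadLocation": "registry.example.org/my-group/my-tool:1.0",
    "executionLocation": "http://localhost:8080/process",
    # Hypothetical resource values.
    "additionalHwRequirements": hw_requirements("2Gi", "1"),
}

print(missing_elements(distribution))
print(distribution["additionalHwRequirements"])
```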

Examples

Example 1: Information Extraction service

ANNIE’s Named Entity Recognizer published at: https://live.european-language-grid.eu/catalogue/#/resource/service/tool/512

The Docker image for this service is stored at the GitLab registry.

Example 2: Machine Translation service

Edinburgh’s German to English MT engine published at: https://live.european-language-grid.eu/catalogue/#/resource/service/tool/623

The Docker image for this service is stored at DockerHub.

3. Register the service at ELG

The current release of ELG offers two options for registering a resource:

A. the ELG interactive editor (see Use the interactive editor)

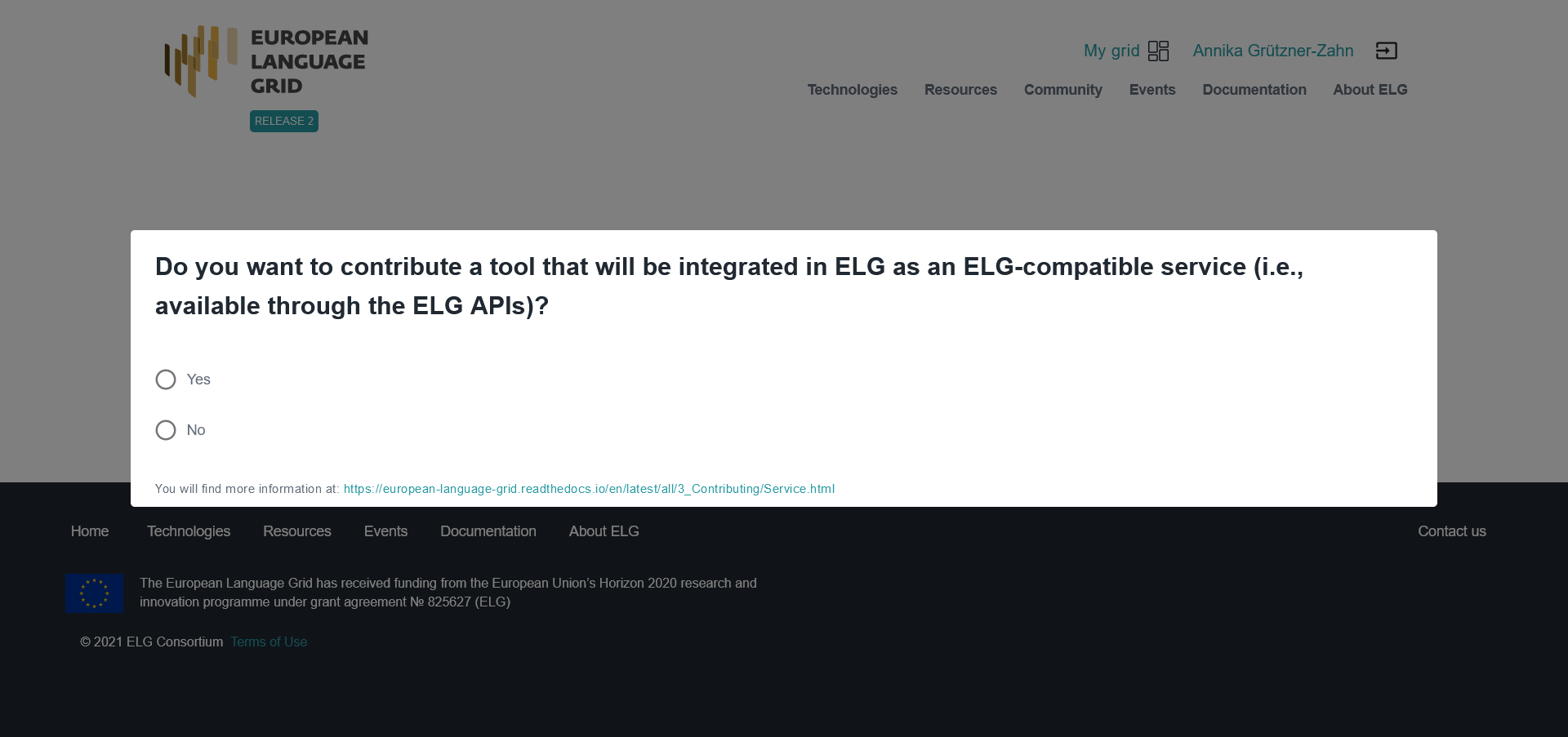

For services integrated at ELG, you MUST select the “Service or Tool” form and register it as an “ELG-compatible service”. At the start pages of the interactive editor, when prompted, select “Yes”.

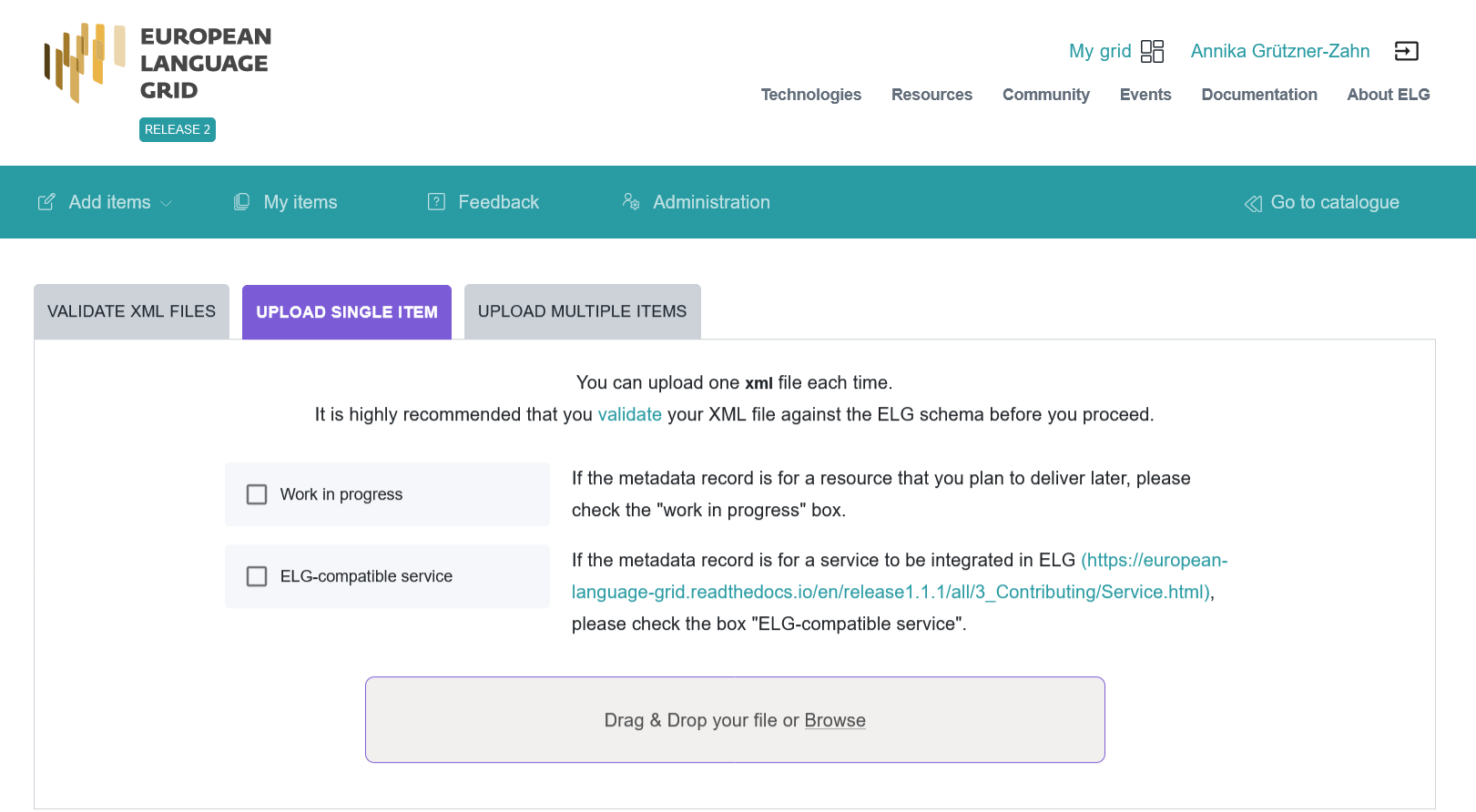

B. the upload of a metadata file that conforms to the ELG schema (see Create and upload metadata files).

For ELG-compatible services, you MUST check the box next to “ELG-compatible” at the upload page.

4. Manage and submit the service for publication

Through the “My items” page you can access your metadata record (see Manage your items) and edit it until you are satisfied. You can then submit it for publication, in line with the publication lifecycle defined for ELG metadata records.

At this stage, the metadata record can no longer be edited and is only visible to you and to us, the ELG platform administrators.

Before it is published, the service undergoes a validation process, which is described in detail at CHAPTER 4: VALIDATING ITEMS.

During this process, the service is deployed to ELG, configured and tested to ensure it conforms to the ELG technical specifications. We describe here the main steps in this process:

LT Service is deployed to ELG and configured: The LT service is deployed (by the validator) to the k8s cluster by creating the appropriate configuration YAML file and uploading it to the respective GitLab repository. The CI/CD pipeline that is responsible for deployments will automatically install the new service on the k8s cluster. If you request it, a separate dedicated k8s namespace can be created for the LT service before creating the YAML file. The validator of the service assigns to it:

the k8s REST endpoint that will be used for invoking it, according to the following template:

http://{k8s service name for the registered LT tool}.{k8s namespace for the registered LT tool}.svc.cluster.local{the path where the REST service is running at}. The {the path where the REST service is running at} part can be found in the executionLocation field in the metadata. For instance, for the Edinburgh’s MT tool above it is ‘/api/elg/v1’.

An ID that will be used to call it.

Which “try out” UI will be used for testing it and visualizing the returned results.
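The endpoint template above can be expressed as a one-line helper. The service name and namespace in this sketch are hypothetical; only the path ‘/api/elg/v1’ comes from the example in the text.

```python
def internal_endpoint(service_name, namespace, path):
    """Fill in the k8s endpoint template for a registered LT tool."""
    if not path.startswith("/"):
        raise ValueError("path must start with '/'")
    return f"http://{service_name}.{namespace}.svc.cluster.local{path}"


# Hypothetical service name and namespace.
print(internal_endpoint("mt-de-en", "example-namespace", "/api/elg/v1"))
# → http://mt-de-en.example-namespace.svc.cluster.local/api/elg/v1
```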

LT Service is tested: On the LT landing page, there is a “Try out” tab and a “Code samples” tab, which can both be used to test the service with some input; see Use an LT service section. The validator can help you identify integration issues and resolve them. This process is continued until the LT service is correctly integrated to the platform. The procedure may require access to the k8s cluster for the validator (e.g., to check containers start-up/failures, logs, etc.).

LT Service is published: When the LT service works as expected, the validator will approve it; the metadata record is then published and visible to all ELG users through the catalogue.

Frequently asked questions

[…] executionLocation? For example, an IE tool has to expose a specific path or use a specific port?

[…] http://{k8s service name for the registered LT tool}.{k8s namespace for the registered LT tool}.svc.cluster.local{the path where the REST service is running at}, which assumes that the service is exposed to port 80.

[…] dockerDownloadLocation) and each of them has to listen in a different HTTP endpoint (executionLocation) but on the same port (for simplicity). E.g., “http://localhost:8080/NamedEntityRecognitionEN”, “http://localhost:8080/NamedEntityRecognitionDE”.

[…] :latest one.

[…] additionalHWRequirements metadata element (see the MT example above) or by communicating with the ELG administrators.